集群爆炸,但是修好了

周一到公司来一看,本地集群连不上了,据说是周末停电关了服务器造成的。既然环境爆炸那啥也干不了只能挂机了, 那可不行,赶快修好了开始干活。

- 首先ssh能通,至少虚拟机没问题,试试docker ps。有反应,但容器全都停在pause上。同时kubectl get node卡住没有回应。

1

2

3

4

5

6

7[root@vmw253 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

949258e9135c 37c6aeb3663b "kube-controller-man…" 3 hours ago Up 3 hours k8s_kube-controller-manager_kube-controller-manager-vmw253_kube-system_b53e0e776bdd23d5be83ec232a105a76_1036

4a3a50cbf1fb registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.4.1 "/pause" 3 hours ago Up 3 hours k8s_POD_kube-controller-manager-vmw253_kube-system_b53e0e776bdd23d5be83ec232a105a76_11

89aa599bc953 registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.4.1 "/pause" 3 hours ago Up About an hour k8s_POD_kube-apiserver-vmw253_kube-system_6aa730f99776180fbac39af544cc5c43_11

76914665acbd 56c5af1d00b5 "kube-scheduler --au…" 3 hours ago Up 3 hours k8s_kube-scheduler_kube-scheduler-vmw253_kube-system_ceb9697816522e2427509f5707b24a58_1007

8248bd5e395d registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.4.1 "/pause" 3 hours ago Up 3 hours k8s_POD_kube-scheduler-vmw253_kube-system_ceb9697816522e2427509f5707b24a58_11 - 求助运维老哥,老哥看了下现场,说api-server不见了,可能是etcd爆炸,叫检查下etcd。因为使用kubesphere脚本部署的,所以也不知道etcd怎么启动的,检查了一圈,没有发现etcd的影子。

- ps -ef时意外发现系统定时任务正在拉起etcd的备份任务,由此确定etcd应该是由systemctl管理,随后crontab -l结果如下

1

2

3

4[root@vmw253 ~]# crontab -l

*/5 * * * * /usr/sbin/ntpdate ntp3.aliyun.com &>/dev/null

0 3 * * * ps -A -ostat,ppid | grep -e '^[Zz]' | awk '{print }' | xargs kill -HUP > /dev/null 2>&1

*/30 * * * * sh /usr/local/bin/kube-scripts/etcd-backup.sh - 检查etcd-backup.sh,发现了备份所在地,从环境变量中取出了数据目录

1

2

3

4

5

6

7

8

9

10

11# 此行声明了备份地址

BACKUP_DIR="/var/backups/kube_etcd/etcd-$(date +%Y-%m-%d-%H-%M-%S)"

# 此行引用了数据目录变量

export ETCDCTL_API=2;$ETCDCTL_PATH backup --data-dir $ETCD_DATA_DIR --backup-dir $BACKUP_DIR

# 此行调用etcdctl进行备份 (重要-1)

{

export ETCDCTL_API=3;$ETCDCTL_PATH --endpoints="$ENDPOINTS" snapshot save $BACKUP_DIR/snapshot.db \

--cacert="$ETCDCTL_CA_FILE" \

--cert="$ETCDCTL_CERT" \

--key="$ETCDCTL_KEY"

} > /dev/null - 检查etcd-backup.sh,发现从环境变量中取出了备份地址,然后从sytemctl status etcd中取到etcd配置,再从etcd配置取到env文件地址(/etc/etcd.env)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27# 因为写的时候已经把etcd修好了,所以Active是running

[root@vmw253 ~]# systemctl status etcd

● etcd.service - etcd

Loaded: loaded (/etc/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: active (running) since 一 2023-07-10 15:06:18 CST; 22min ago

Main PID: 36781 (etcd)

Tasks: 25

Memory: 97.8M

CGroup: /system.slice/etcd.service

└─36781 /usr/local/bin/etcd

[root@vmw253 ~]# cat /etc/systemd/system/etcd.service

[Unit]

Description=etcd

After=network.target

[Service]

User=root

Type=notify

EnvironmentFile=/etc/etcd.env

ExecStart=/usr/local/bin/etcd

NotifyAccess=all

RestartSec=10s

LimitNOFILE=40000

Restart=always

[Install]

WantedBy=multi-user.target - 检查env文件,得到关键的数据目录地址

1

ETCD_DATA_DIR=/var/lib/etcd - 停止etcd,移除数据目录

1

2systemctl stop etcd

mv /var/lib/etcd /var/lib/etcd.bak - 从定时任务触发的命令中发现了etcd的命令行客户端:etcdctl,使用–help看看他的用法,发现一个snapshot restore指令

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38[root@vmw253 ~]# etcdctl snapshot

NAME:

snapshot - Manages etcd node snapshots

USAGE:

etcdctl snapshot <subcommand> [flags]

API VERSION:

3.4

COMMANDS:

restore Restores an etcd member snapshot to an etcd directory

save Stores an etcd node backend snapshot to a given file

status Gets backend snapshot status of a given file

OPTIONS:

-h, --help[=false] help for snapshot

GLOBAL OPTIONS:

--cacert="" verify certificates of TLS-enabled secure servers using this CA bundle

--cert="" identify secure client using this TLS certificate file

--command-timeout=5s timeout for short running command (excluding dial timeout)

--debug[=false] enable client-side debug logging

--dial-timeout=2s dial timeout for client connections

-d, --discovery-srv="" domain name to query for SRV records describing cluster endpoints

--discovery-srv-name="" service name to query when using DNS discovery

--endpoints=[127.0.0.1:2379] gRPC endpoints

--hex[=false] print byte strings as hex encoded strings

--insecure-discovery[=true] accept insecure SRV records describing cluster endpoints

--insecure-skip-tls-verify[=false] skip server certificate verification (CAUTION: this option should be enabled only for testing purposes)

--insecure-transport[=true] disable transport security for client connections

--keepalive-time=2s keepalive time for client connections

--keepalive-timeout=6s keepalive timeout for client connections

--key="" identify secure client using this TLS key file

--password="" password for authentication (if this option is used, --user option shouldn't include password)

--user="" username[:password] for authentication (prompt if password is not supplied)

-w, --write-out="simple" set the output format (fields, json, protobuf, simple, table) - 通过备份脚本中的命令(重要-1)和上一步中的提示,反向拼接还原命令

1

/usr/local/bin/etcdctl --endpoints=https://192.168.20.233:2379 snapshot restore /var/backups/kube_etcd/etcd-2023-07-08-00-00-01/snapshot.db --cacert=/etc/ssl/etcd/ssl/ca.pem --cert=/etc/ssl/etcd/ssl/admin-vmw253.pem --key=/etc/ssl/etcd/ssl/admin-vmw253-key.pem - 很遗憾报了错,说数据目录已存在,旁边运维老哥提示加data-dir参数,于是把刚才得到的参数拼接进去

1

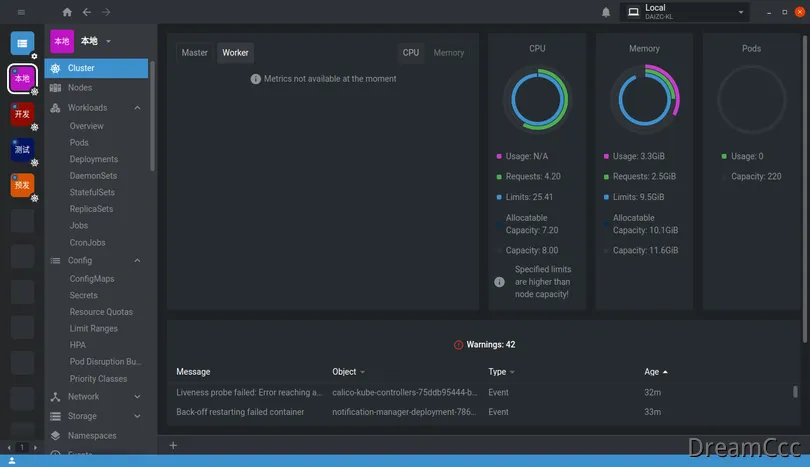

/usr/local/bin/etcdctl --endpoints=https://192.168.20.233:2379 snapshot restore /var/backups/kube_etcd/etcd-2023-07-08-00-00-01/snapshot.db --cacert=/etc/ssl/etcd/ssl/ca.pem --cert=/etc/ssl/etcd/ssl/admin-vmw253.pem --key=/etc/ssl/etcd/ssl/admin-vmw253-key.pem - 这次不遗憾,看起来成功了,随后启动etcd,未见明显异常。kubectl 也马上返回了结果,随后集群很快恢复了

1

2

3

4

5

6

7

8

9

10

11

12

13[root@vmw253 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

949258e9135c 37c6aeb3663b "kube-controller-man…" 3 hours ago Up 3 hours k8s_kube-controller-manager_kube-controller-manager-vmw253_kube-system_b53e0e776bdd23d5be83ec232a105a76_1036

4a3a50cbf1fb registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.4.1 "/pause" 3 hours ago Up 3 hours k8s_POD_kube-controller-manager-vmw253_kube-system_b53e0e776bdd23d5be83ec232a105a76_11

89aa599bc953 registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.4.1 "/pause" 3 hours ago Up 54 minutes k8s_POD_kube-apiserver-vmw253_kube-system_6aa730f99776180fbac39af544cc5c43_11

76914665acbd 56c5af1d00b5 "kube-scheduler --au…" 3 hours ago Up 3 hours k8s_kube-scheduler_kube-scheduler-vmw253_kube-system_ceb9697816522e2427509f5707b24a58_1007

8248bd5e395d registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.4.1 "/pause" 3 hours ago Up 3 hours k8s_POD_kube-scheduler-vmw253_kube-system_ceb9697816522e2427509f5707b24a58_11

[root@vmw253 ~]# systemctl start etcd

[root@vmw253 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

vmw253 Ready control-plane,master,worker 525d v1.23.0

vmw254 Ready worker 525d v1.23.0

vmw255 Ready worker 525d v1.23.0

居然修好了,真实可喜可贺呢:-D